Data Transfer from Azure SQL to Snowflake: My Experience Using Slowly Changing Dimensions, Change Data Capture, and Column Data Masking with Fivetran

Creating a data pipeline from Azure SQL Server to Snowflake can be a complex and time-consuming task, but with the help of Fivetran, the process is made much simpler.

In this blog post, we will discuss the steps involved in creating a robust pipeline that includes the use of Slowly Changing Dimensions Type 2 for historical data, Change Data Capture for transferring only new data to Snowflake, and Column Data Masking for hiding Personally Identifiable Information (PII) for security reasons.

We use the Azure SQL Database like it is an OLTP Database from which we want to pull the data into the OLAP System.

This Blog is split into multiple parts:

Slowly Changing Dimensions Type 2 is a technique used to track changes to data over time.

It allows us to maintain a history of changes to data, which is essential when working with large datasets.

By using this method, we can ensure that our data is accurate and up to date, even as it changes over time.

Change Data Capture is another important component of our pipeline.

It allows us to only transfer new data to Snowflake, rather than having to transfer the entire dataset each time.

This can significantly reduce the time and resources required to transfer data, making the process more efficient.

Finally, we have Column Data Masking.

This is a security measure that is used to hide Personally Identifiable Information (PII) from the data.

By using this technique, we can ensure that sensitive information is not exposed, helping to protect the privacy of our users.

Part1

Azure SQL Server Setup

The first step in creating our pipeline is to set up an Azure Database.

This can be done by following the steps outlined in this tutorial:

Once our Azure Database is set up, we can connect to it using Azure Data Studio.

You can use this tutorial:

Next, we will create the necessary tables for our pipeline.

In this example, we will only be using two small tables: a "customer" table and a "banking_details" table.

The code for creating these tables is as follows:

In the customer table, we've created the columns for 'id', 'first_name', 'last_name', 'email', 'street', 'city' and 'zip_code' and set 'email' as the unique key.

In the banking_details table, we've created columns for 'id', 'customer_id', 'type', 'cc_number' and 'expiration_date' and set 'cc_number' as the unique key.

We've also added a foreign key 'customer_id' referencing the 'id' column in the customer table.

With our tables created and set up, we can now insert some fake data into them.

As mentioned earlier, we would usually use Column Data Masking on the data, but for the purposes of this tutorial, we will not be doing that yet.

The code i used for inserting the data:

With the data inserted, we can now run a SELECT statement to see the results.

The SELECT statement we will use is as follows:

This will give us a table that includes all of the columns from both the "customer" table and the "banking_details" table, with the rows matching based on the customer_id in the banking_details table and the id in the customer table.

With our data in place, we can now move on to the next step in our pipeline: Change Data Capture. Change Data Capture (CDC) is a feature in Databases that allows us to track and capture insert, update, and delete activity on a table.

This is an important feature for our pipeline, as it allows us to only transfer new data to Snowflake, rather than having to transfer the entire dataset each time.

To enable CDC on our Azure SQL Server database, we will use the following SQL code:

This code activates CDC on our database and enables it for the "customer" and "banking_details" tables.

The "@role_name" parameter is set to "patrickadmin" in this example, but it can be set to any valid role name that has the necessary permissions.

It's important to note that you will need a standard tier S3 100 DTU database to enable CDC, which can be seen in the screenshot provided.

Part2

Snowflake Setup

To begin, we will create a free Snowflake trial account. This can be done by visiting the Snowflake website and signing up for the free trial.

Next, we will create a developer role in Snowflake aswell as a database.

We will use the following code to create the role and grant necessary rights to access the data:

This code will create a developer role, grant it access to a warehouse, and create a database named "PATRICKDB".

It also grants the developer role full access to the database and future schemas/tables.

Finally, the code switches to the developer role to perform any further operations.

As we continue to set up our Snowflake environment, the next step is to create a data warehouse schema within our database. This schema will be used to store and organize our data.

The following code can be used to create the data warehouse schema:

Now that we have our data warehouse schema set up, we can create the tables that will store our data within it.

The following code can be used to create the "CUSTOMER" and "BANKING_DETAILS" tables in our Snowflake schema:

These statements create tables named "CUSTOMER" and "BANKING_DETAILS" within our "DWH_DBO" schema, with the same columns and data types as the tables we created in Azure SQL Server.

It is important to note that Snowflake does not require primary keys or indexes to be created, as it automatically indexes data for optimal query performance.

In the next step we create a masking policy and add it to the columns we want to hide the sensitive data such as email addresses, street addresses, city names and zip codes, as well as credit card numbers.

The following code can be used to create and apply the masking policy to the appropriate columns in our "CUSTOMER" and "BANKING_DETAILS" tables:

By pulling the data into the Snowflake tables, the sensitive information will be hidden as per the masking policy we defined.

Next, we set up a separate user and warehouse specifically for use with the data integration tool Fivetran.

This allows Fivetran to access and load data without interfering with or slowing down other users' operations.

The code for creating this user, role, and warehouse, along with granting the necessary permissions, is as following:

Part3

Fivetran Setup

In this part of the process, we will set up Fivetran to connect to Snowflake and Azure.

First, we need to create a Fivetran account. This can be done easily by using the Partner Connect feature in Snowflake, which can be found under Admin > Partner Connect.

Here, you can search for Fivetran and connect it to your account.

Alternatively, you can manually create a new account and add all the necessary information like we will do.

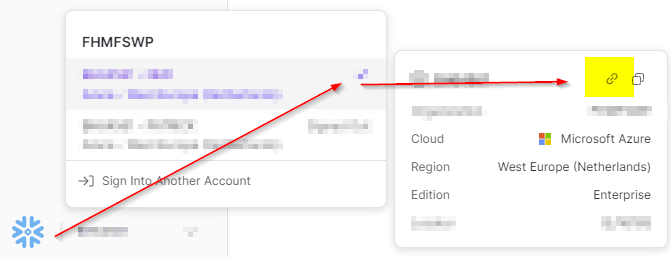

We need to obtain the host URL for Snowflake. This can be found by copying the account URL from Snowflake, as shown in the provided screenshot.

In Fivetran we will add a new destination and call it Snowflake.

- In the host field, we use the account URL without the http part.

- The port is 443.

- The user is FIVETRAN_USER

- The database is the name of your database (mine is PATRICKDB)

- The auth is PASSWORD, and the password is the one we used while creating the Fivetran user (mine is Patrick123).

- The role is FIVETRAN_ROLE

- The location is the location of your data warehouse (mine is EU)

- The provider is Azure

- The timezone is your timezone.

Finally, we can use Save & Test and if everything is correct, it will work.

Looks like it worked :)

Next we need a connector to our Azure Database.

In this step, we will set up Fivetran to connect to our Azure SQL Database.

- Go to Connectors in Fivetran and add a new one.

- Search for "Azure SQL Database" and select it.

- In the schema field, use "DWH"

- In the host field, use the server name found in the overview blade of the Azure SQL Database

- For example, "patricksserver.database.windows.net"

- Use port 1433.

- Use the login credentials for the Azure SQL Database. In this example, the user is "patrickadmin@patricksserver" and the password is "Patrick123"

- In the database field, use the name of the Azure SQL Database. For example, "patrick_azure_db"

- We have to add the IP's to our Firewall allowance in Azure. I have added the ips to my azure server under Networking blade like you can see on the screenshot

- Use the "Save & Test" button to ensure the connection is successful.

With the configuration provided above, Fivetran will automatically fetch the schema and tables from the Snowflake database.

In this example, we have chosen to include both the CUSTOMER and BANKING_DETAILS tables for this project.

Additionally, since we wanted to implement a Slowly Changing Dimension Type 2 and Change Data Capture pipeline, we activated it under the Connectors > Schema > Change to History, as seen in the screenshot provided.

Lastly, under the Connectors > Status tab, we initiate the initial synchronization and wait for the initial data load to complete.

Part4

Results

To verify the success of our data pipeline, we can now query the BANKING_DETAILS and CUSTOMER tables in Snowflake and review the output. Additionally, you should see the new columns generated in the manner of slowly changing dimensions (SCD).

To verify that our data masking policy is working correctly, we will switch to the user accountadmin (or any other user with permissions) and run the same queries we used earlier to retrieve data from the BANKING_DETAILS and CUSTOMER tables.

As expected, the sensitive information such as the credit card numbers will be masked and not accessible to this user.

This confirms that our data masking policy has been set up and implemented successfully.

Next, we will test the CDC functionality to ensure that it is also functioning as expected.

Now, in Azure Data Studio, we add a new row to both the CUSTOMER and BANKING_DETAILS tables.

Then, we manually run the sync in Fivetran to ensure that the changes are being captured by the CDC pipeline.

We can see that the new row has been added to both tables, and in the fivetran_sync column, it is evident that the data is untouched and only the new row has been added.

Next we are going to test the last thing.

Slowly changing dimension. When we update a new column, the active state, start and end should be changed.

Lets see on the next screenshot what will happen after we use this code and sync in fivetran.

As we can see, the update to the customer table was successful. The street for Jessica Jones has been updated to '122 New Street' and the previous street is now recorded as history with an active state of false. This demonstrates the functionality of the slowly changing dimension we set up earlier.

In the final step, we tested the history for deleted rows. By executing the following code in Azure:

The status was set to inactive.

Part5

The End

We have successfully set up a Snowflake data warehouse, created tables, implemented data masking and slowly changing dimension, and also integrated Fivetran for data pipeline and data replication.

We have also tested the system for data masking, CDC, and SCD, and we can see that it works as expected.

This project serves as an example of how easy it is to set up a data warehouse with Snowflake and Fivetran, and how powerful these tools can be in managing and protecting sensitive data.

Thanks for following along and i hope you found this helpful.

Thank you for reading.

I had a lot of fun working on this project.

Greetings

Patrick :)

Comments

Post a Comment